SRDR+ 5.5. What Other Fields Do I Need to Extract?

There are three other tabs you will need to set up to extract data from your articles: Sample Characteristics, Outcome Details, and Risk of Bias Assessment.

- 5.5.1. Sample Characteristics

- 5.5.2. Outcome Details

- 5.5.3. Risk of Bias Assessment

- 5.5.4. Repeating the Question by Arm or Outcome

5.5.1. Sample Characteristics

There is a set of questions about the makeup of a study’s sample that will apply to almost any systematic review project:

- Age of the participants

- Sex (and/or gender) makeup of the sample

- N recruited (this is likely to differ from the N analyzed, which you’ll capture in the Results tab)

- Dropout rate

Additionally, you will likely want to add a few questions that are specific to your research question or study population. What might these be? Think about which sample characteristics could affect the results of the study.

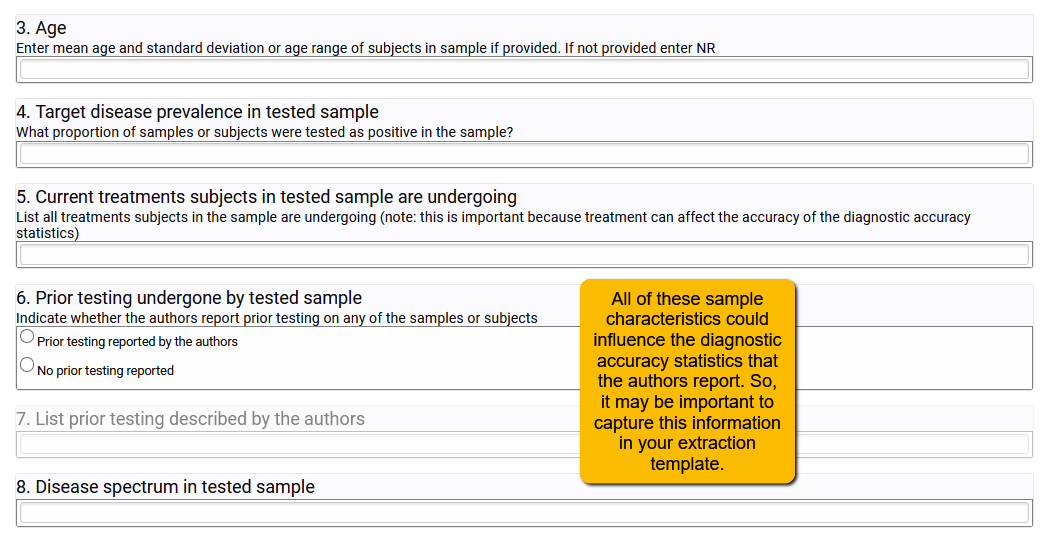

For instance, if you are carrying out a Diagnostic Accuracy systematic review, consider questions like these:

So, take some time to think about what other sample characteristics might plausibly affect the results. It is better to capture those up front than to wish you had later!

5.5.2. Outcome Details

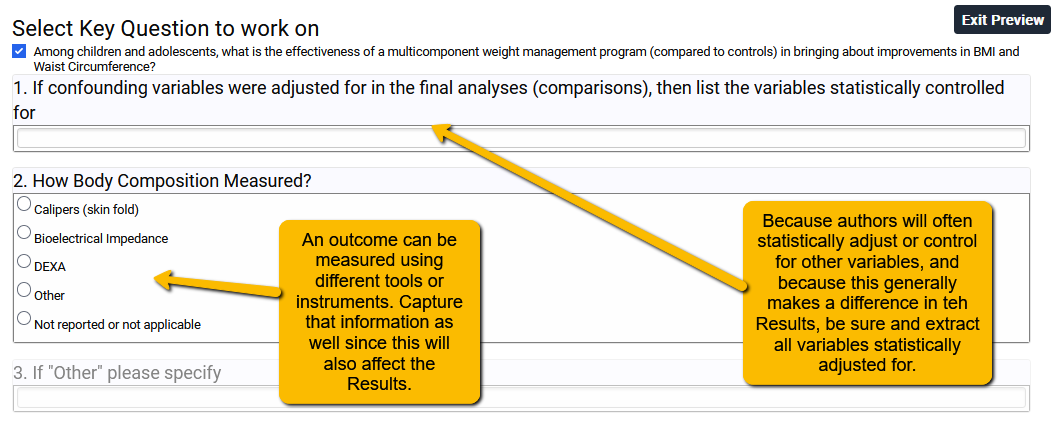

Use the Outcome Details tab to collect information about (1) any covariates that the authors statistically controlled or adjusted for in the analyses, and (2) different tools that may be used to measure the same outcome.

Note that in the example above, the confounding variables question (Question 1) is free text since authors could statistically adjust for a broad range of variables. If in your project there are only one or two key confounders that should be adjusted for, then you may be able to get by with a structured question (e.g., radio button or checkbox).

In Question 2, I know ahead of time which tools are commonly used to measure body composition in this area of research, so I can use a structured question. If you are unfamiliar with a field and don’t know the common measures, pay close attention to them during full-text screening. What you learn in the full-text review can help you structure your template. As always, plan ahead!

5.5.3. Risk of Bias Assessment

There are two ways to set up your Risk of Bias Assessment tab:

- manually

- automatically

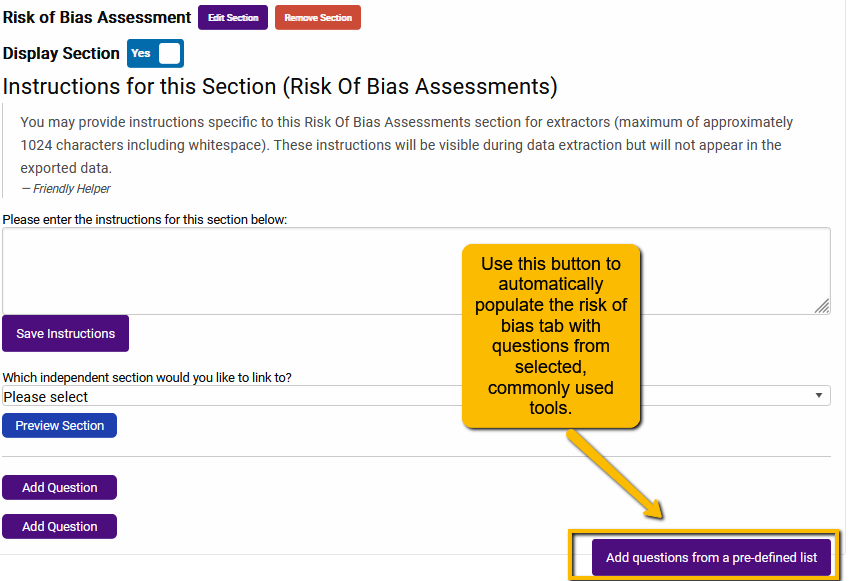

To set up your risk of bias questions manually, create the list of questions just as you would for the other tabs. If you use this approach, we recommend working closely from the instrument’s guidance document, both to ensure that you have the correct structure and to provide contextualized instructions for your analysts.

However, to make life a little easier, SRDR+ provides a structured entry method for some of the most commonly used risk of bias instruments. The instruments available for automatic setup are:

Randomized Studies

- Revised Cochrane risk-of-bias tool for randomized trials (RoB 2)

- The RoB 2.0 Tool (cluster-randomized, parallel-group trials)

- Cochrane ROB (original)

- Jadad Controlled Clinical Trials 17:1-12 (1996) (not recommended)

Nonrandomized Studies

- The Risk of Bias In Non-randomized Studies–of Interventions (ROBINS-I) assessment tool (Cohort-type studies)

- Modified Newcastle-Ottawa Quality Assessment Scale – Case Control Studies

- Modified Newcastle-Ottawa Quality Assessment Scale – Cohort Studies

- EPC (AHRQ Evidence-Based Practice Centers) Common Dimensions

- McMaster Quality Assessment Scale for Harms (McHarm)

Diagnostic Accuracy Studies

- STARD – quality for diagnostic tests (not recommended, as STARD is a reporting guideline and not a true risk of bias tool)

- QUADAS-2

Setting up any of the above instruments is easy. On the Risk of Bias Assessment page of the Extraction Template builder, you will see a button “Add questions from a pre-defined list”:

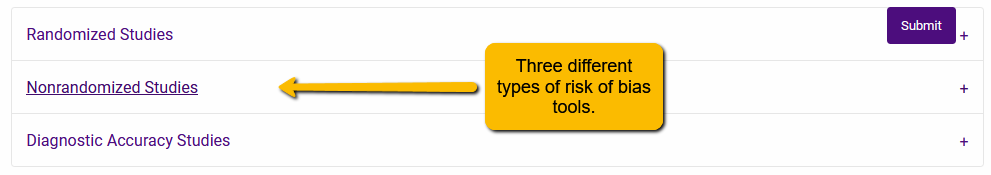

Clicking on this button will open a dialogue with the three different types of risk of bias tools:

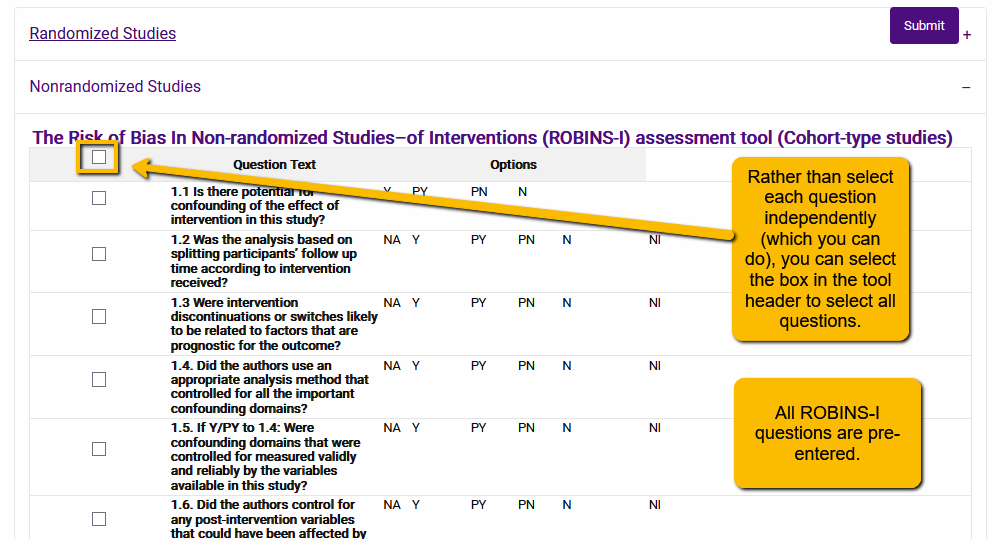

Click on the plus sign to open the set of tools under the appropriate option. For instance, let’s say that we wanted to add the ROBINS-I tool to our extraction template. We would expand the Nonrandomized Studies section and scroll to the ROBINS-I tool:

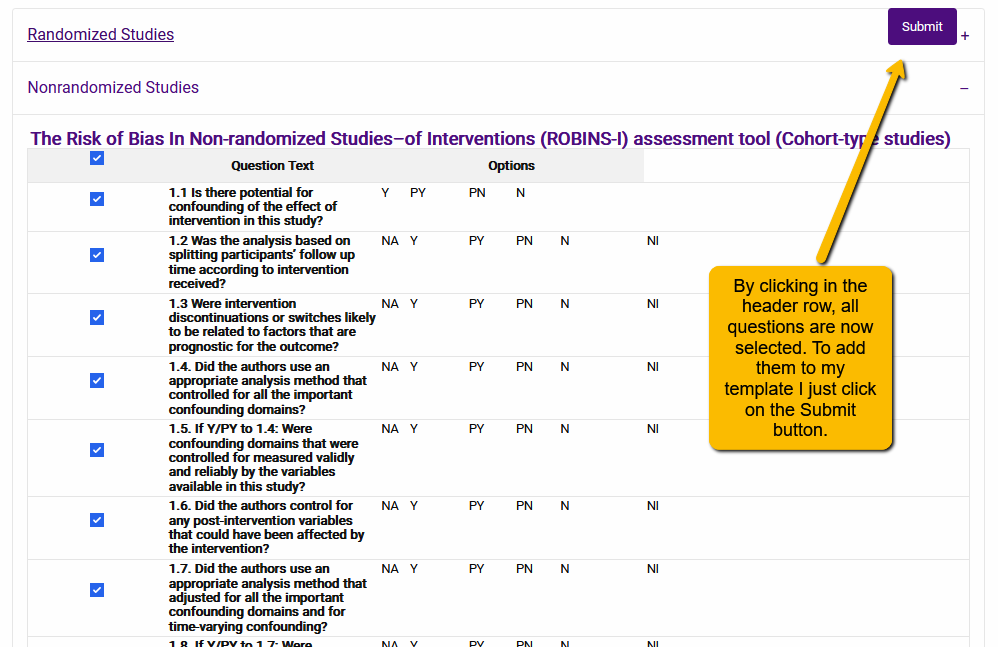

By selecting the box in the gray header row, you will select all questions associated with that tool. Now, click the Submit button to add all the questions to your extraction template.

Two additional points to note:

You can edit the pre-defined questions. Even if you use the pre-defined list to populate your Risk of Bias tab automatically, you can still edit the questions on the tab. Generally, you would not want to modify the standard tools, but there may be situations in which this is useful:

- When the tool contains an “other bias” question (e.g., the original Cochrane RoB) and you want to be more specific about risks relevant to your particular project.

- When you need to repeat questions (e.g., to evaluate different outcomes separately; see below).

You can add more than one tool. This is relevant when your project contains more than one type of study design. For instance, if your project includes both randomized and non-randomized studies of interventions, you may want to add both Cochrane ROB 2.0 and ROBINS-I.

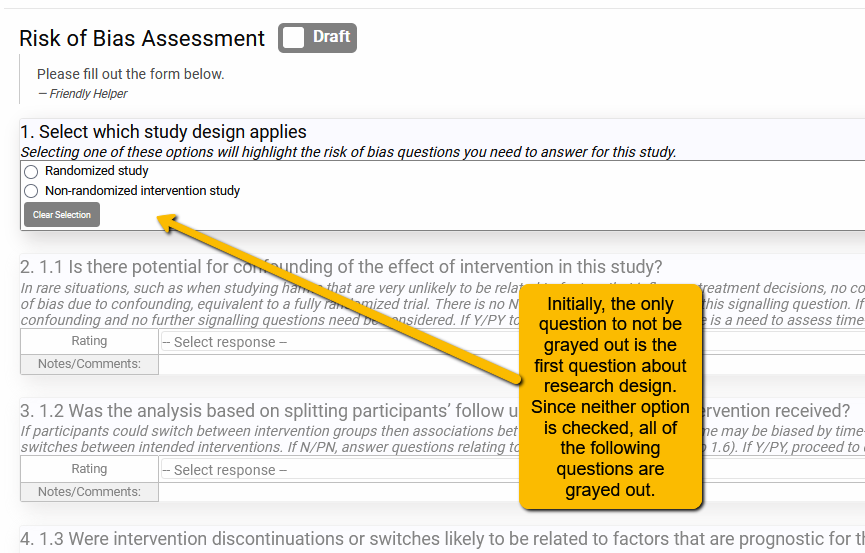

Having multiple risk of bias tools on one Risk of Bias tab could be confusing for data extractors, since they may not know exactly which questions apply to which designs. You can get around this problem by including a screening question at the top of the Risk of Bias tab and then using the Dependencies function to turn on only the questions that apply to the selected design (see SRDR+ 5. Tips for Creating a Data Extraction Template). It takes a little work to set up, but remember: you didn’t have to build all the risk of bias questions by hand, so it’s not terrible.
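Dependencies are configured in the SRDR+ template builder, not in code. Purely to make the screening-question logic described above concrete, here is a minimal Python model of it. Everything in it is an assumption for illustration: the names `QUESTIONS` and `enabled_questions`, the option labels, and the abbreviated RoB 2 and ROBINS-I question wording are hypothetical and are not part of SRDR+.

```python
# Conceptual model only: these names are hypothetical and are NOT part of SRDR+.
# A screening question at the top of the Risk of Bias tab records the study
# design; each risk of bias question depends on one screener option.

RANDOMIZED = "Randomized (RoB 2)"
NONRANDOMIZED = "Non-randomized (ROBINS-I)"
SCREENER_OPTIONS = (RANDOMIZED, NONRANDOMIZED)

# (question text, screener option that turns it on); wording abbreviated.
QUESTIONS = [
    ("1.1 Was the allocation sequence random?", RANDOMIZED),
    ("1.2 Was the allocation sequence concealed?", RANDOMIZED),
    ("1.1 Is there potential for confounding?", NONRANDOMIZED),
]


def enabled_questions(selected_option):
    """Return the questions an extractor can answer for a given screener answer.

    In this model, no dependent question is enabled until a design is chosen.
    """
    if selected_option is None:
        return []
    if selected_option not in SCREENER_OPTIONS:
        raise ValueError(f"unknown screener option: {selected_option!r}")
    return [text for text, option in QUESTIONS if option == selected_option]


if __name__ == "__main__":
    for option in SCREENER_OPTIONS:
        print(option, "->", enabled_questions(option))
```

In this sketch, adding a third tool would only mean adding one more screener option and tagging that tool’s questions with it; the questions for the other designs stay untouched.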

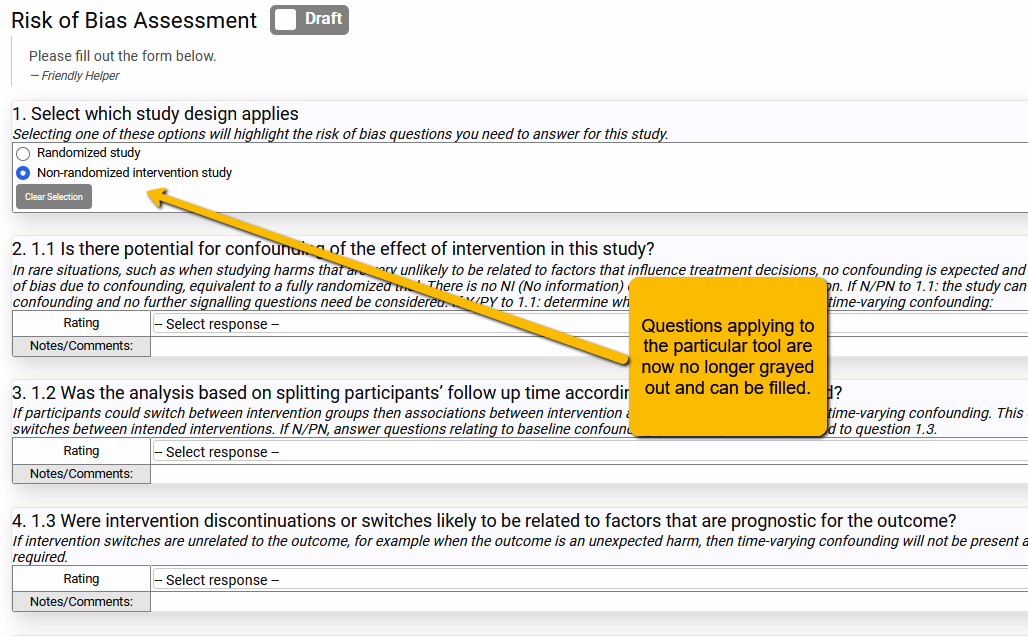

In the example below, we included both the ROBINS-I (for non-randomized studies) and the Cochrane ROB 2.0 tool (for randomized studies). We added dependencies to all the questions so that, when the data extractor selects one of the options in the screener question, only the questions that apply to that design are “turned on.”

However, when one of the options is selected:

5.5.4. Repeating the Question by Arm or Outcome

Imagine this scenario: you need to capture the demographic characteristics of each arm (e.g., the sex distribution could differ between arms). You also need to capture the particular mix of intervention components in each arm. This means you will need to repeat questions on the Sample Characteristics tab and the Arm Details tab for each arm.

While you could simply duplicate each question once per arm (e.g., one copy for the intervention arm and one for the control arm), this breaks down if some of your studies have more than two arms (e.g., two intervention arms plus a control arm).

A better approach is to use an SRDR+ feature that lets you link some tabs (dependent tabs) to other tabs (the independent tabs: Arms and Outcomes) so that questions repeat as often as needed. You only have to set up each question once, and it will repeat within the dependent tab as many times as necessary.

Here is where that functionality comes into play.

Let’s say you want to:

- repeat every question on the Arm Details tab for each arm (e.g., if different interventions are carried out in different arms),

- repeat every question on the Sample Characteristics tab for each arm,

- repeat every question on the Outcome Details tab for each outcome (e.g., if you want to capture covariates separately for each outcome),

- carry out a separate risk of bias assessment for each outcome rather than for the whole article.

We will now show you how to set up your extraction template to do this.

5.5.4.1 Repeating Arm Details and Sample Characteristics Questions for Each Arm

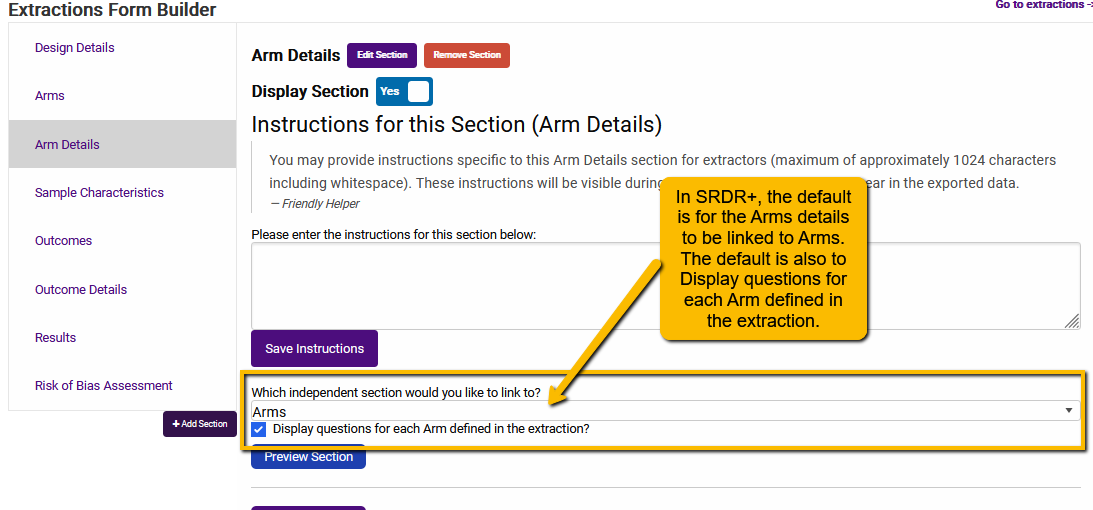

On the Arm Details tab or the Sample Characteristics tab of the Extraction Template builder, you will see a section that asks, “Which independent section would you like to link to?”

Linking to Arms is the default setting in SRDR+ for both the Arm Details and Sample Characteristics tabs.

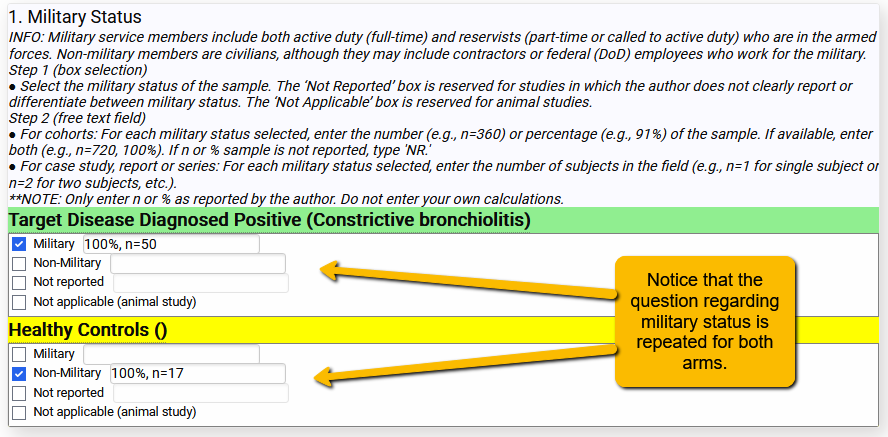

What does this look like on the front end? See the following example where the study compared soldiers deployed to the Southwest Asia Theater of Operations to healthy controls:

So, rather than create the question twice in the extraction template, the question only needs to be entered into the template once but will repeat for each arm. This is particularly handy when the number of arms might vary (e.g., 2 arms versus 3 arms).
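To make the “enter once, repeat per arm” idea concrete, here is a minimal Python model. It is not how SRDR+ is implemented: `build_form`, the `linked_to_arms` flag, and the question and arm labels are hypothetical. Setting `linked_to_arms=False` mimics removing the link to Arms, as described below, so the questions appear only once.

```python
# Conceptual model only: hypothetical names, not SRDR+ internals.
# Questions are defined once on a dependent tab (e.g., Sample Characteristics).
# When the tab is linked to Arms, the form shows one copy of every question per arm.

from typing import Dict, List

SAMPLE_QUESTIONS = ["N recruited", "Percent female", "Mean age (years)"]


def build_form(questions: List[str], arms: List[str],
               linked_to_arms: bool = True) -> Dict[str, List[str]]:
    """Map each arm (or the whole study, if unlinked) to the questions shown."""
    if not linked_to_arms:
        return {"Whole study": list(questions)}
    return {arm: list(questions) for arm in arms}


two_arm = build_form(SAMPLE_QUESTIONS, ["Deployed", "Healthy controls"])
three_arm = build_form(SAMPLE_QUESTIONS,
                       ["Intervention A", "Intervention B", "Control"])
unlinked = build_form(SAMPLE_QUESTIONS, ["Deployed", "Healthy controls"],
                      linked_to_arms=False)

for name, form in [("two-arm", two_arm), ("three-arm", three_arm),
                   ("unlinked", unlinked)]:
    total = sum(len(qs) for qs in form.values())
    print(f"{name}: {len(form)} group(s), {total} answers to extract")
```

The template holds the same three questions in every case; only the number of answer slots changes (6 for two arms, 9 for three arms, and 3 when unlinked).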

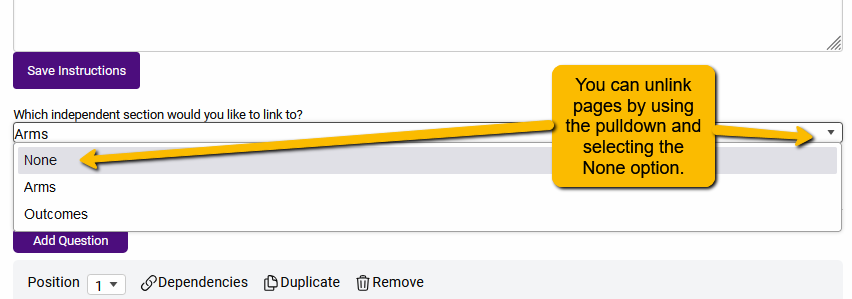

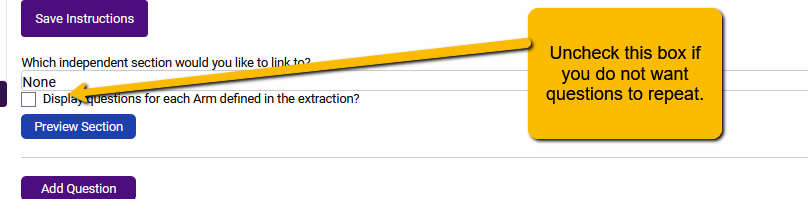

Depending on your project, you may not need or want questions to repeat. In this case, change the settings so that Arm Details are not linked to Arms.

Additionally, uncheck the “Display questions for each Arm defined in the extraction” option.

With these settings in place, the questions on the Arm Details tab will not repeat.

Note: for most projects where you will be comparing arms, you will likely want to keep the SRDR+ default settings in place.

5.5.4.2 Repeating Outcome Details Questions for Each Outcome

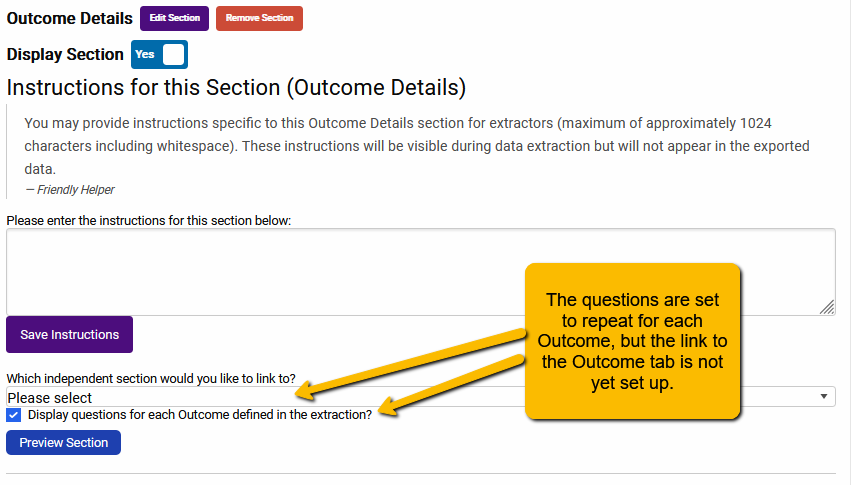

The default setting for Outcome Details is a bit different from that for Arm Details. When you first open the Outcome Details tab in the Extraction Template builder, you will see the following:

To make the Outcome Details questions repeat for every outcome, we use the pulldown to link to the Outcomes tab.

Note: if, as in the earlier example, you want to have separate structured questions that capture the measurement options for different outcomes, you will NOT want to repeat each question (e.g., the measurement tools used to capture body composition are different from the measurement instruments used to capture pain level).

5.5.4.3 Separate Risk of Bias Assessment for Each Outcome

If you are following the US Agency for Healthcare Research and Quality standards for evaluating risk of bias, you will need to evaluate risk with respect to each outcome separately:

“Allow for separate risk of bias ratings by outcome to account for outcome-specific variations in detection bias and selective outcome reporting bias. Categories of outcomes, such as harms and benefits, may have different sources of bias.” (See Assessing the Risk of Bias of Individual Studies in Systematic Reviews of Health Care Interventions)

Similarly, Cochrane requires separate risk of bias evaluation for each outcome.

But, how to do this within SRDR+?

Unlike the Arm Details tab, the default setting in SRDR+ for the Risk of Bias Assessment tab is not to repeat questions by outcome. Here are the current default settings in SRDR+:

Linking to Outcomes is done the same way as described above: simply use the pulldown to link to the Outcomes tab.

Note: At the time of writing, even with the ROB section linked to the Outcomes tab, the questions will not automatically repeat. Thus, we have to use a workaround.

Not every question needs to be repeated for every outcome. For example, when using the Cochrane ROB 2.0 tool, it is possible for questions about blinding of participants to differ by outcome (e.g., a trial that combines a drug treatment for cancer [easily blinded] with a behavioral intervention [not blinded] for a quality of life outcome), but it is difficult to imagine randomization or allocation concealment questions differing by study outcome. However, questions pertaining specifically to outcome assessment (e.g., Domain 6 in the ROBINS-E instrument) will almost certainly differ depending on the outcome in question.

Thus, while some questions will need to be repeated for each key outcome, other questions will not.

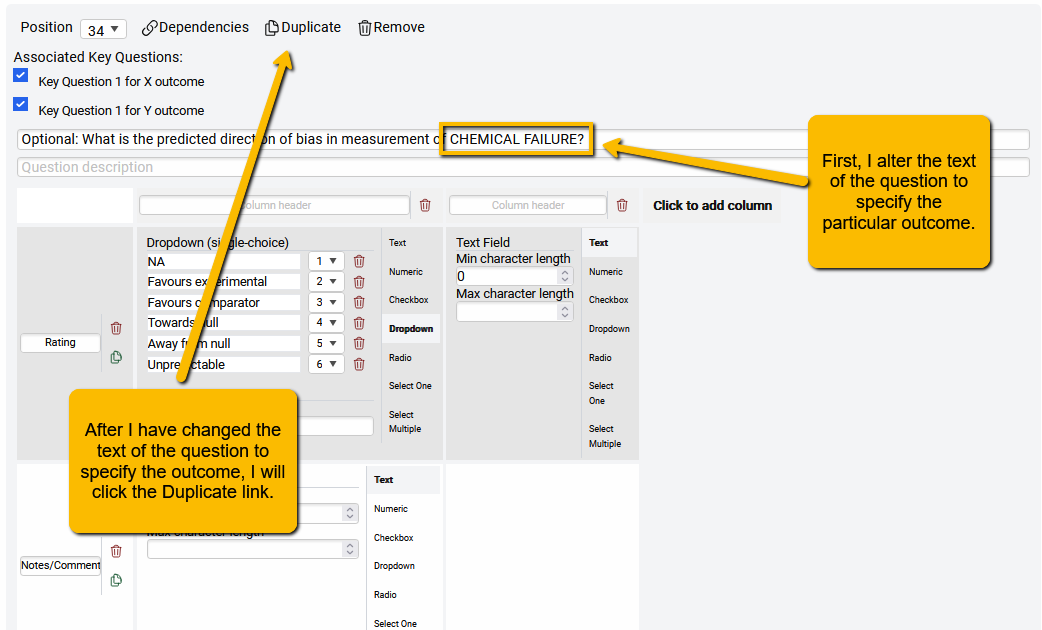

Workaround: Once you have set up the questions (for example, using the “Add questions from a pre-defined list” button, or by hand), you can manually add additional questions relevant to the different outcomes. In the following example, we added the Cochrane ROB 2.0 questions using the “Add questions from a pre-defined list” button, but we want to ask “What is the predicted direction of bias in measurement of the outcome?” separately for two outcomes: “chemical failure” (i.e., return of cancer biomarkers after treatment) and “quality of life”.

First, we scroll down to the question to be repeated.

Second, we alter the text of the question to specify which outcome the question refers to.

Third, we click the Duplicate link to create a duplicate of this question (the new question will appear at the end of the ROB questions).

Fourth, we scroll down to the new question and change “Chemical failure” to “Quality of Life”:

Optional: If you want the new question to follow the duplicated question, you can use the Position pulldown to change the question order. In this example, since the “Chemical failure” question is #34, we can change the “Quality of Life” question to #35.

Note: the use of all caps in the above examples is not necessary. It is a convention that we use to more clearly signal to the extractors/evaluators the different outcomes.
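Before duplicating questions by hand, it can help to write out the list the workaround should produce. The Python sketch below is a hypothetical planning aid, not an SRDR+ feature: `plan_rob_questions`, the per-question repeat flags, and the parenthesized outcome labels are assumptions, and the RoB 2 question wording is abbreviated. Shared questions appear once; each outcome-specific question is copied once per outcome, with the copies kept together as in the optional Position step.

```python
# Hypothetical planning aid (not an SRDR+ feature): lists the Risk of Bias
# questions that the duplicate-and-relabel workaround should produce.

OUTCOMES = ["CHEMICAL FAILURE", "QUALITY OF LIFE"]

# (question text, repeat for each outcome?); wording abbreviated.
ROB_QUESTIONS = [
    ("Was the allocation sequence random?", False),
    ("Was the allocation sequence concealed?", False),
    ("What is the predicted direction of bias in measurement of the outcome?", True),
]


def plan_rob_questions(questions, outcomes):
    """Keep shared questions once; copy outcome-specific ones once per outcome.

    Copies are placed next to each other, like using the Position pulldown
    to put the "Quality of Life" copy right after the "Chemical failure" one.
    """
    plan = []
    for text, repeat_per_outcome in questions:
        if repeat_per_outcome:
            plan.extend(f"{text} ({outcome})" for outcome in outcomes)
        else:
            plan.append(text)
    return plan


if __name__ == "__main__":
    plan = plan_rob_questions(ROB_QUESTIONS, OUTCOMES)
    for position, question in enumerate(plan, start=1):
        print(position, question)
```

Which questions get the repeat flag is exactly the team decision discussed below; the sketch only makes the resulting question count and order visible before you edit the template.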

Important Considerations for Evaluating ROB by Outcome

Which questions should be repeated? There is no clear guidance on this, but, in general, consider the specific questions (and Domains) within the risk of bias tool you are using, and determine which are likely, or at least possible, to receive different assessments for different outcomes. It is important to discuss and plan this ahead of time with the team, especially the team methodologist, to determine which risk of bias questions should be repeated for your project.

What is the optimal placement for repeated questions? Again, there is no clear guidance on this. It may be easier for your analysts to consider each of the questions sequentially, in which case you would likely want to keep the repeated questions next to each other in the risk of bias instrument. Alternatively, you could place repeated questions at the end of your risk of bias tool. A good strategy is to ask your team which structure they would find most useful.

Can I assume analysts understand how to evaluate different outcomes? In a word: No. Training and team discussion of the differences between the outcomes (how they are measured, possible directions of bias, etc.) are critical. It is best to do the training ahead of time with a few example articles: review analyst responses and discuss any discrepancies.